Detecting Nonexistent Pedestrians

National Tsing-Hua University

In ICCV 2017 workshop

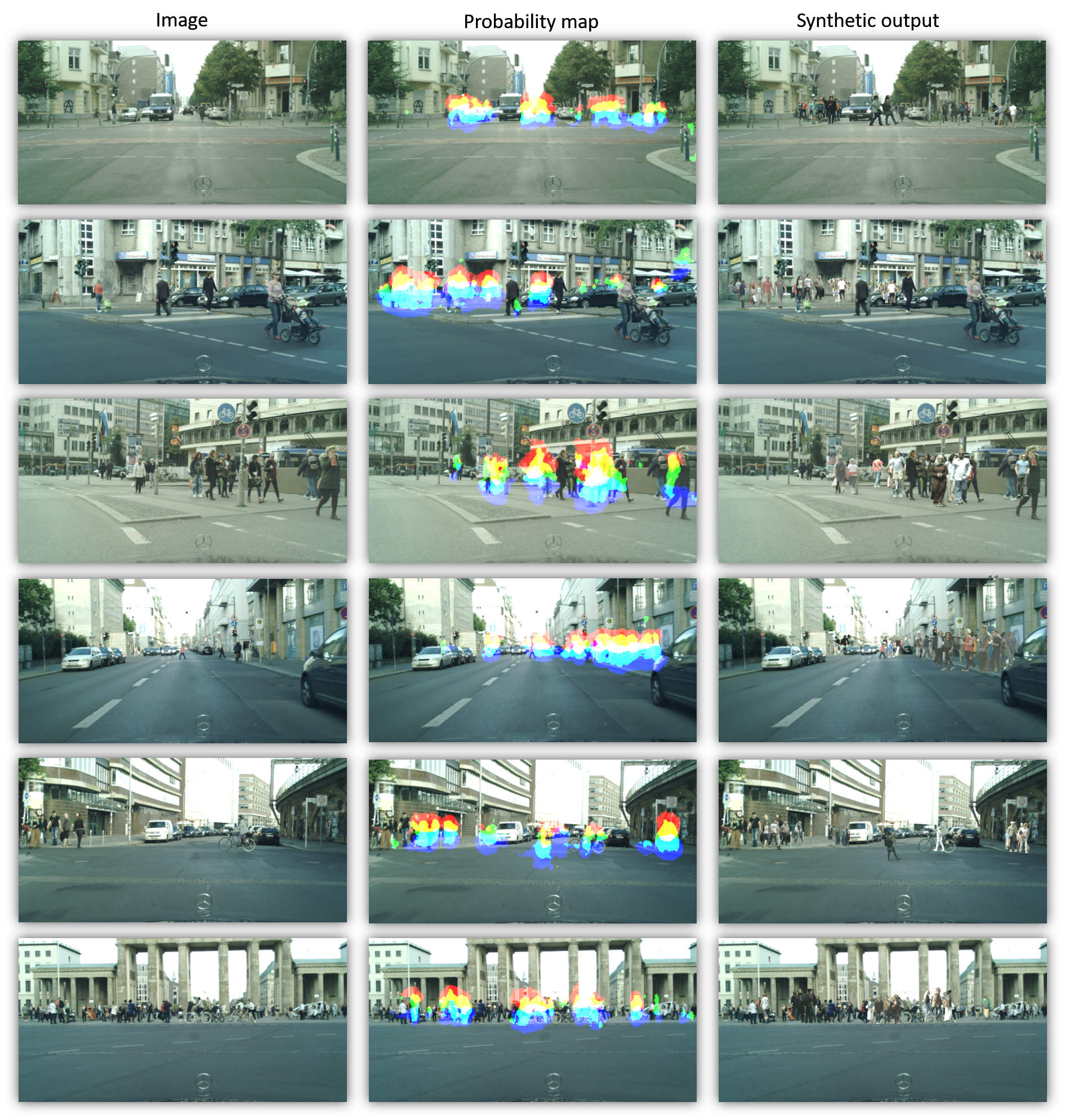

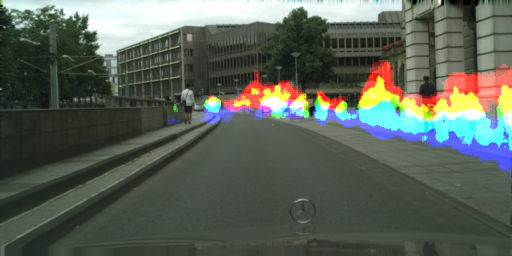

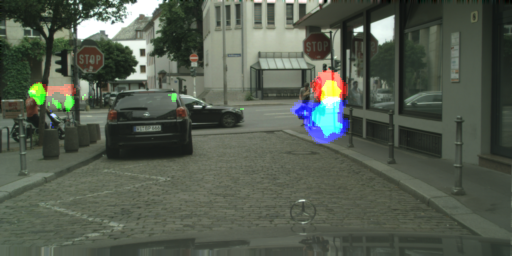

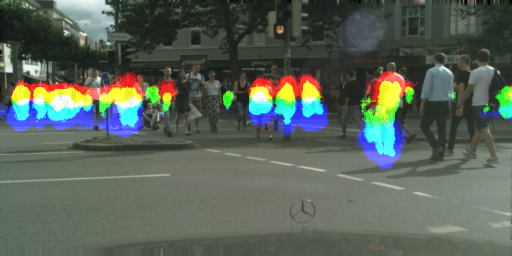

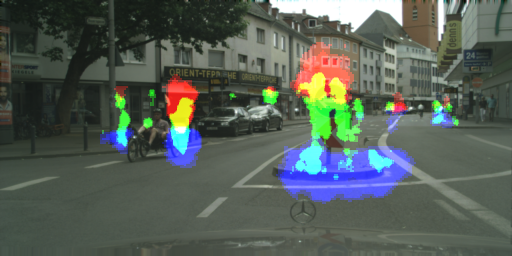

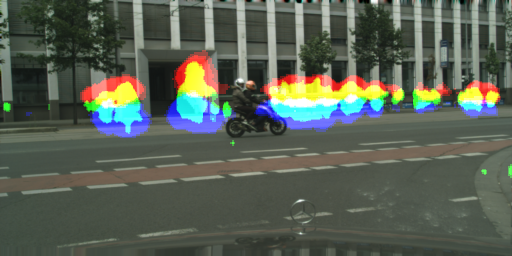

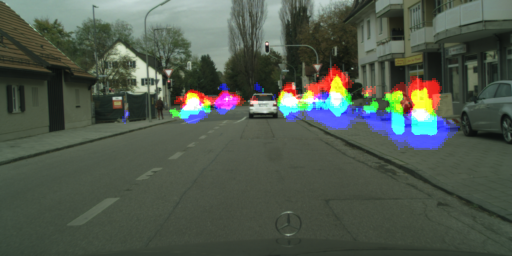

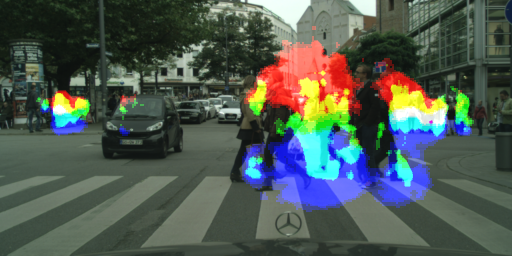

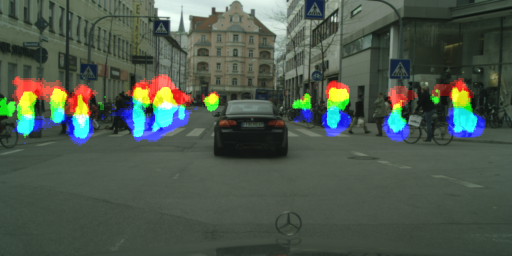

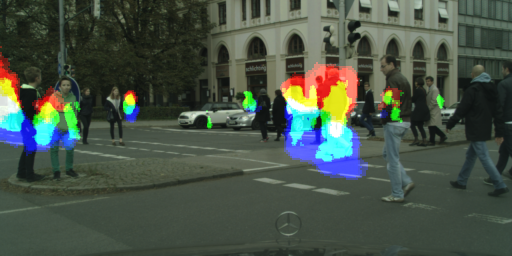

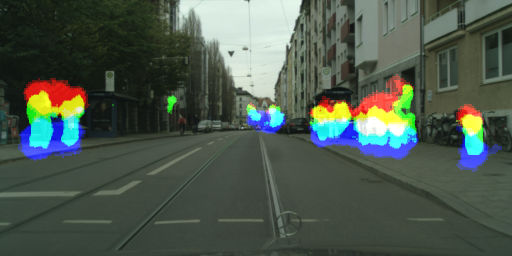

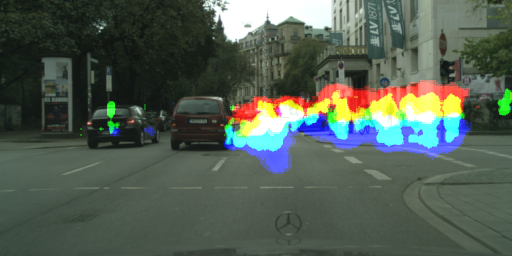

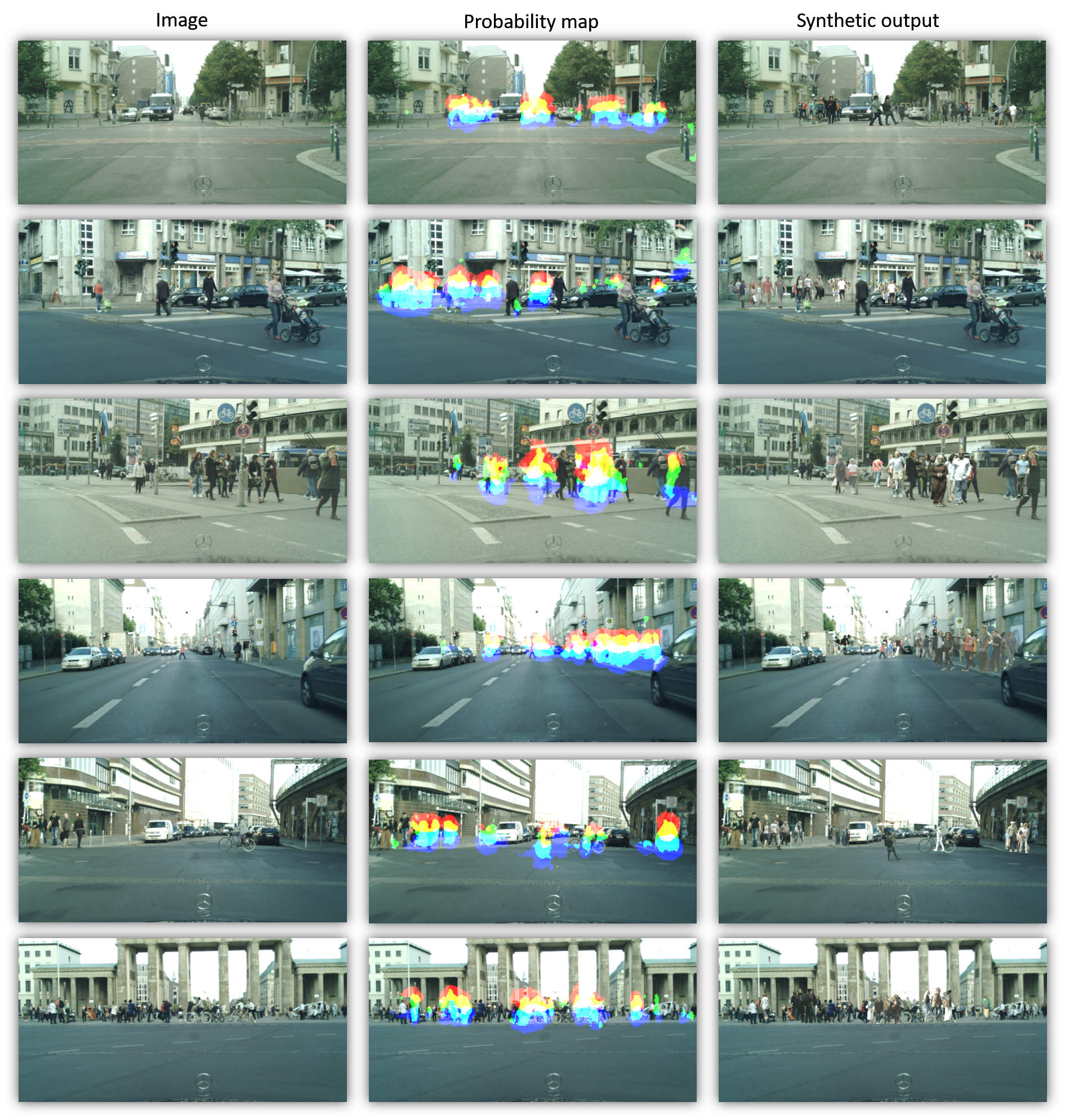

Examples of the synthesis pipeline. We adjust the image brightness for better visualization. From left to right: input images, predicted heatmaps, and synthesized images with phantom pedestrians placed according to the predicted heatmaps. The likelihoods of head and feet positions are depicted in red and blue, respectively.

Abstract

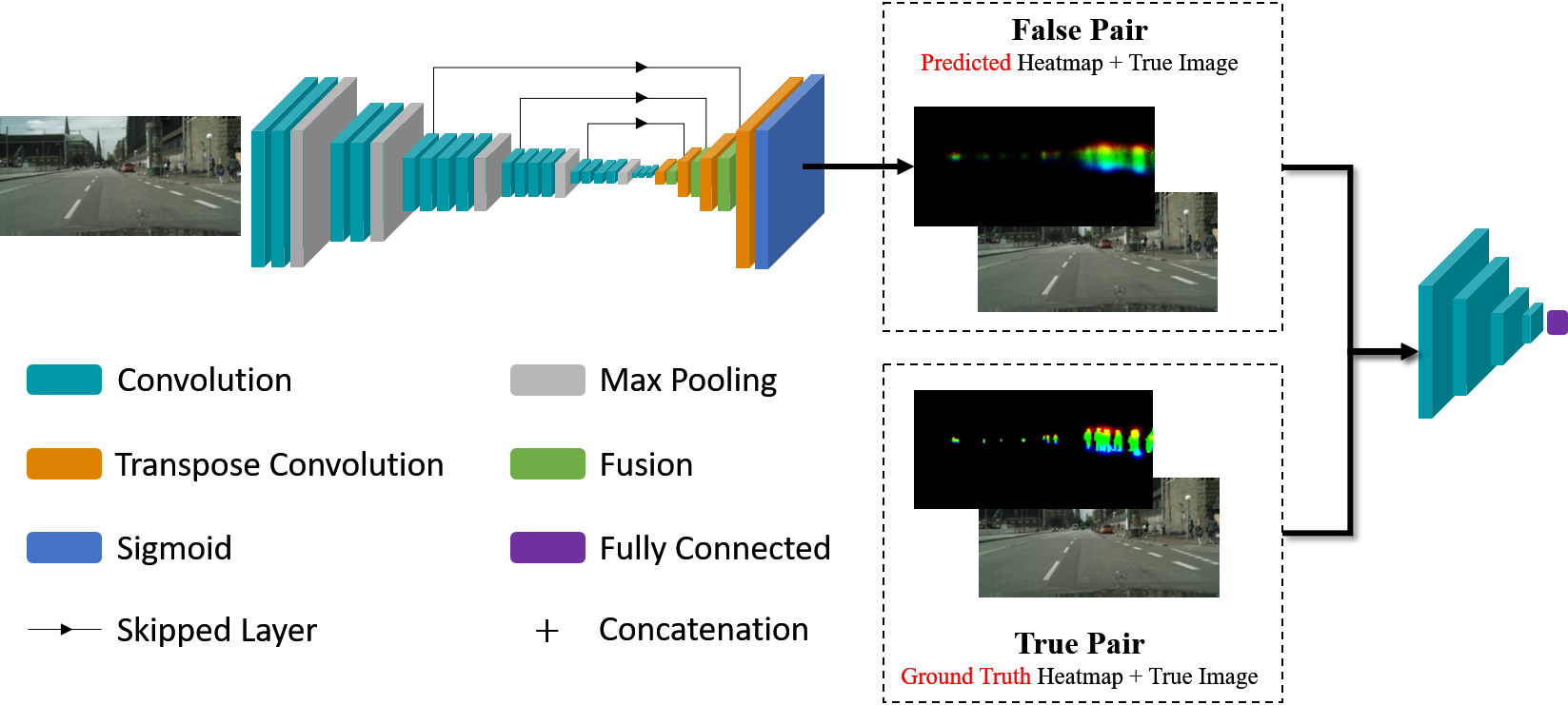

We explore beyond object detection and semantic segmentation, and propose to address the problem of estimating the presence probabilities of nonexistent pedestrians in a street scene. Our method builds upon a combination of generative and discriminative procedures to achieve the perceptual capability of figuring out missing visual information. We adopt state-of-the-art inpainting techniques to generate the training data for nonexistent pedestrian detection. The learned detector can predict the probability of observing a pedestrian at a given location in an image, even if that location exhibits only the background. We evaluate our method by inserting pedestrians into images according to the presence probabilities and conducting a user study to determine the "realisticness" of the synthetic images. The empirical results show that our method can capture the idea of where the reasonable places are for pedestrians to walk or stand in a street scene.
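As a rough illustration of "inserting pedestrians according to the presence probabilities", the sketch below picks an insertion position from a presence-probability heatmap. It is not the authors' implementation: the heatmap layout (a list of rows of non-negative scores) and the helper names `sample_location` and `most_likely_location` are assumptions made for this example, and the actual pipeline works with separate head and feet likelihoods.

```python
import random


def sample_location(heatmap, rng=None):
    """Sample a (row, col) cell with probability proportional to its score.

    `heatmap` is a hypothetical list of rows of non-negative scores.
    """
    rng = rng or random.Random()
    cells = [(r, c) for r, row in enumerate(heatmap) for c in range(len(row))]
    weights = [heatmap[r][c] for r, c in cells]
    if sum(weights) <= 0:
        raise ValueError("heatmap has no positive mass to sample from")
    # random.choices draws with replacement using relative weights.
    return rng.choices(cells, weights=weights, k=1)[0]


def most_likely_location(heatmap):
    """Return the (row, col) cell with the highest score."""
    cells = [(r, c) for r, row in enumerate(heatmap) for c in range(len(row))]
    return max(cells, key=lambda rc: heatmap[rc[0]][rc[1]])


if __name__ == "__main__":
    # Toy 3x3 heatmap: most of the mass sits at the centre cell.
    toy = [
        [0.0, 0.0, 0.0],
        [0.0, 0.9, 0.1],
        [0.0, 0.0, 0.0],
    ]
    print("argmax cell:", most_likely_location(toy))
    print("sampled cell:", sample_location(toy, random.Random(0)))
```

Sampling rather than always taking the maximum would let repeated runs place pedestrians at different plausible positions, which is one reasonable design choice for generating varied synthetic images.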

Recent Related Work

Xiaolong Wang*, Rohit Girdhar*, and Abhinav Gupta. Binge Watching: Scaling Affordance Learning from Sitcoms. Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017 (spotlight). (* indicates equal contribution.)

[pdf]

Shiyu Huang and Deva Ramanan. Expecting the Unexpected: Training Detectors for Unusual Pedestrians with Adversarial Imposters. Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

[pdf]

Jin Sun and David Jacobs. Seeing What Is Not There: Learning Context to Determine Where Objects Are Missing. Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017 (spotlight).

[pdf]

Junting Pan, Cristian Canton Ferrer, Kevin McGuinness, Noel O'Connor, Jordi Torres, Elisa Sayrol, and Xavier Giro-i-Nieto. SalGAN: Visual Saliency Prediction with Generative Adversarial Networks. Proc. of IEEE Conference on Computer Vision and Pattern Recognition Scene Understanding Workshop (CVPR SUNw), 2017 (spotlight).

[pdf]

Namhoon Lee, Xinshuo Weng, Vishnu Naresh Boddeti, Yu Zhang, Fares Beainy, Kris Kitani, and Takeo Kanade. Visual Compiler: Synthesizing a Scene-Specific Pedestrian Detector and Pose Estimator.

[pdf]